There are several ways to handle occlusion testing for rendering. They can be roughly classified into CPU-based and GPU-based techniques.

CPU based Culling

CPU-based techniques include BSP portal systems with potentially visible sets (PVS), standalone portal systems similar to the one used so far in the OpenGL Graphic Module, and view blockers.

BSP Portal System

BSP-based systems create a binary portal system from the BSP tree. The technique was made popular by the Quake-based engines. The PVS is the result of a precomputation step that determines, for every room in the BSP portal system, which other rooms are potentially visible, hence the name potentially visible set.

This technique works best with static indoor scenes. Because portal generation is automatic, however, the fragmentation into portal rooms grows quickly with scene complexity. BSP-based systems are also unsuitable for dynamic scenes, since any change to the portal structure invalidates the PVS, which is time consuming to recalculate.

This technique is not suitable for a game engine allowing all kinds of scenes.

Drag[en]gine Portal System

The portal system used so far in the OpenGL Graphic Module follows a similar approach, but the portal system is made by hand by the artist. This keeps the complexity of the portal system to a minimum, which improves performance a great deal compared to the BSP portal system. Visibility is calculated using frustum culling algorithms applied to the portal mesh.

This technique is fast as long as the complexity of the portal mesh is low. It also works with dynamic portal meshes since no PVS of any kind is precalculated. For outdoor scenes, however, this kind of portal system is still not the best choice. A better solution was required, one which in the end fully replaces this portal system technique as well.

Blocker Culling

Another possible technique is using blocker meshes. The idea rests on the observation that if an occluder is large enough, objects behind it are fully invisible if they lie completely inside the frustum formed by the occluder and the camera position. The advantage of this technique is that fast collision detection algorithms exist for testing a box or sphere (bounding volumes are enough) against a frustum.

This kind of culling is quite fast but suffers from the overlapping culling problem. Overlapping culling refers to objects that overlap two or more occluders. If an object is covered by more than one occluder, it is time consuming to figure out whether it is fully invisible, since it is not fully inside any single culling frustum. The most common solution is to consider the object visible and call it a day, since complex analytical invisibility testing can cost more than simply sending the invisible object down the render pipeline.
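The blocker test can be sketched in a few lines. This is only an illustration under stated assumptions, not the module's code: the occluder is taken to be a single convex polygon, the object is represented by a bounding sphere, and the names `shadow_frustum`, `sphere_hidden` and `is_culled` are made up for this example. An object that straddles two occluders is simply reported as visible, as described above.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Plane = Tuple[Vec3, float]  # (unit normal pointing into the hidden volume, offset)


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v: Vec3) -> Vec3:
    length = dot(v, v) ** 0.5
    return (v[0] / length, v[1] / length, v[2] / length)


def shadow_frustum(camera: Vec3, occluder: Sequence[Vec3]) -> List[Plane]:
    """Planes bounding the volume hidden behind a convex occluder polygon."""
    count = len(occluder)
    centroid = tuple(sum(v[i] for v in occluder) / count for i in range(3))
    planes: List[Plane] = []
    # One side plane through the camera and each occluder edge.
    for i in range(count):
        a, b = occluder[i], occluder[(i + 1) % count]
        normal = normalize(cross(sub(a, camera), sub(b, camera)))
        if dot(normal, sub(centroid, camera)) < 0:  # make the normal point inward
            normal = (-normal[0], -normal[1], -normal[2])
        planes.append((normal, dot(normal, camera)))
    # The occluder plane itself, facing away from the camera.
    normal = normalize(cross(sub(occluder[1], occluder[0]),
                             sub(occluder[2], occluder[0])))
    if dot(normal, sub(camera, occluder[0])) > 0:
        normal = (-normal[0], -normal[1], -normal[2])
    planes.append((normal, dot(normal, occluder[0])))
    return planes


def sphere_hidden(planes: Sequence[Plane], center: Vec3, radius: float) -> bool:
    """True only if the sphere lies completely inside the hidden volume."""
    return all(dot(normal, center) - offset >= radius for normal, offset in planes)


def is_culled(frustums: Sequence[Sequence[Plane]], center: Vec3, radius: float) -> bool:
    """Conservative: culled only if a single occluder hides the whole sphere."""
    return any(sphere_hidden(planes, center, radius) for planes in frustums)
```

A box can be handled the same way by testing its eight corners against every plane instead of comparing the sphere distance with the radius.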

The OpenGL Module has so far used blocker culling in addition to portal systems. With the new culling system blocker culling is no longer required. It could still be used as a broad-scale pre-culling step for objects before the actual culling is done. This might be added in the future.

CPU/GPU based Culling

Given the limits of CPU-based systems, the logical conclusion is to move the work to the hardware instead. Some culling systems take advantage of the GPU for culling calculations. The basic idea is to let the rasterization of the graphics card and the depth buffer take care of overlapping culling and dynamic occluders. Most of the time the CPU is still required to read back the result of the GPU calculation. In some cases the culling can be left fully on the GPU, but in general this read-back to the CPU is required. The read-back is also the main problem of CPU/GPU based culling techniques. The GPU is designed for fast writing and rendering, not for fast reading back. As soon as a read-back is done the GPU stalls, which reduces performance a lot.

Hardware Occlusion Query

The first solution invented for moving occlusion testing to the hardware is what is called hardware occlusion query, or just occlusion query for short. Occlusion query is an OpenGL extension which has seen several variations. nVidia, ATI and, at the beginning, HP each had their own version made for their graphics cards and chips. Later on these were unified into the ARB_occlusion_query extension. Basically, occlusion query lets you create a query object and start a query on it. Then the bounding box of the object to be tested is rendered with a bare-bones shader to be as fast as possible. Then the query is closed and the result read back. The result is an integer value stating how many pixels have been written to the screen. The actual culling work is done by the depth buffer. This in turn requires a depth pre-pass to be rendered with the potential occluders before doing any queries. The query thus counts all the pixels of the object's bounding box that are in front of any blocking geometry.
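What the query counts can be mimicked in software. The sketch below is an emulation for illustration only, not OpenGL code: it assumes a depth buffer stored as nested lists where larger values are farther away, a bounding box flattened to a screen rectangle with one constant depth, and a made-up function name `count_visible_samples`. In real OpenGL the same count is obtained by wrapping the box draw call in `glBeginQuery(GL_SAMPLES_PASSED, id)` and `glEndQuery(GL_SAMPLES_PASSED)` and fetching it with `glGetQueryObjectuiv`.

```python
from typing import List, Tuple

Rect = Tuple[int, int, int, int]  # (x0, y0, x1, y1), upper bounds exclusive


def count_visible_samples(depth: List[List[float]], rect: Rect, box_depth: float) -> int:
    """Count the box pixels that pass the depth test against the pre-pass."""
    x0, y0, x1, y1 = rect
    return sum(1
               for y in range(y0, y1)
               for x in range(x0, x1)
               if box_depth < depth[y][x])  # nearer than the stored occluder depth


# Depth pre-pass: a wall at depth 0.3 fills the two left columns,
# the rest of the 4x4 buffer is empty (far plane at 1.0).
depth_prepass = [[0.3, 0.3, 1.0, 1.0] for _ in range(4)]

# A box fully behind the wall yields zero samples and can be culled.
hidden = count_visible_samples(depth_prepass, (0, 0, 2, 4), 0.5) == 0
```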

This screen space approach is very simple and logical. While it sounds great in theory it is anything but great in reality. You can do only one query at a time, and for each query you need to render a box to the screen. This limits the number of objects you can reasonably query to only a handful. Testing a large number of objects is simply not possible. Furthermore, one query typically takes 2-5ms to complete depending on the size of the tested object. Occlusion query is thus far too slow and inefficient to be used for large-scale culling of many objects on the screen.

Such limitations make this kind of culling unsuitable for this Graphic Module.

Hierarchical Z-Culling / Occlusion Maps

An interesting idea now is to turn hardware-based culling into a pure image space solution, skipping hardware depth buffer testing and occlusion queries altogether. Many developers (especially AAAs) forget that the GPU is not just a rendering machine with fancy shaders but also a massive SIMD mathematical workhorse. By moving from looking at the GPU as a render machine to looking at it as a math unit you can transform the problem into another one which has a clever solution. These techniques are typically called hierarchical z-culling or hierarchical occlusion maps (HOM).

The idea is to take a depth buffer as an image and build a pyramid out of it. When doing occlusion testing we want to find out whether a point of interest on our object is located behind occluders. The basic observation is that, for any collection of points, the object is only hidden if its frontmost point has a larger depth than (that is, lies behind) the rearmost occluder point among all the points we look at. In other words, if we shrink the depth image by a factor of 2 or 4 and compare the points at these scales, we still get the same answer as when comparing all pixels at the highest resolution, or at worst err on the side of visible rather than invisible. Using a depth image pyramid all the way down to a 2×2 image we can test our objects with a fixed number of comparisons by simply picking the layer in the pyramid that covers the object with the maximum number of pixels we want to spend on the test.

HOM systems read the depth image pyramid back to the CPU to carry out the occlusion testing there. This Graphic Module goes some steps further.

The OpenGL Graphic Module Culling System

For this advanced culling version the Occlusion Mesh System is used. One of the main problems with hardware occlusion culling is that rendering the depth pass is already a source of slow-down. During the depth pass we do not know yet which objects will be culled. So which objects should be rendered in the depth pass? We do not want to lose the performance gained by culling to the cost of rendering the depth buffer. For this reason the OpenGL Graphic Module renders the occlusion map from the occlusion meshes.

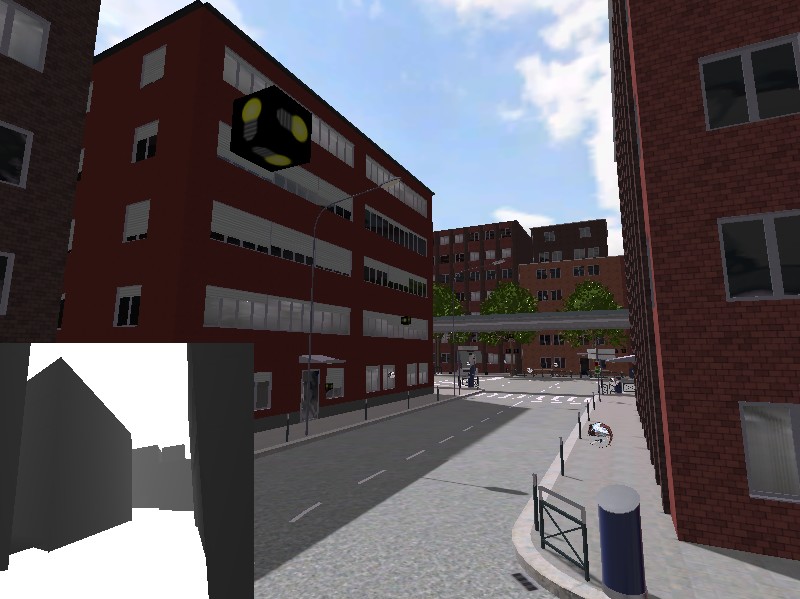

Since we now render an image which has nothing to do with the actual screen content, we can use any resolution we want. In fact the occlusion map is a 256×256 image. A smaller image renders faster, and fast is what we want for our culling algorithm. Since occlusion meshes are already an abstraction of the world and in general have a low polygon count, rendering the occlusion map is very fast.

Once the occlusion map is rendered, a mip-map pyramid is generated for it. Normal mip-mapping calculates the average of the collapsed pixels. This is wrong for occlusion culling, though. Instead the mip-mapping process is simulated using shaders which calculate the maximum pixel value rather than the average. Each layer in the occlusion map thus contains the farthest occluder point at its resolution. What is now left is testing.
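The maximum-based reduction can be sketched on the CPU as follows. This is an illustrative stand-in for the shader passes, assuming a square power-of-two depth image stored as nested lists with larger values being farther away. The function names `downsample_max` and `build_pyramid` are made up for this example.

```python
from typing import List

Image = List[List[float]]


def downsample_max(level: Image) -> Image:
    """Halve the resolution, keeping the farthest depth of each 2x2 block."""
    size = len(level) // 2
    return [[max(level[2 * y][2 * x], level[2 * y][2 * x + 1],
                 level[2 * y + 1][2 * x], level[2 * y + 1][2 * x + 1])
             for x in range(size)]
            for y in range(size)]


def build_pyramid(depth: Image, smallest: int = 2) -> List[Image]:
    """Return all layers from full resolution down to smallest x smallest."""
    levels = [depth]
    while len(levels[-1]) > smallest:
        levels.append(downsample_max(levels[-1]))
    return levels


occlusion_map = [
    [0.2, 0.2, 0.3, 0.3],
    [0.2, 1.0, 0.3, 0.3],  # a one-pixel gap (1.0) in the top-left occluder
    [0.5, 0.5, 1.0, 1.0],
    [0.5, 0.5, 1.0, 1.0],
]
pyramid = build_pyramid(occlusion_map)
```

The top-left block shows why averaging fails: its average is 0.4, which would hide an object at depth 0.5 even though it can be seen through the gap. The maximum keeps 1.0, so the object stays visible.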

In contrast to HOM and similar algorithms, though, the testing is done not on the CPU but on the GPU itself. All objects for which culling could be useful (right now these are all objects) are asked to submit their culling test parameters. Depending on the capabilities of the hardware these parameters are placed either in a texture or in a VBO stream (requires EXT_transform_feedback or similar). Then all parameters are rendered with one full screen quad. This is the beauty of this technique. You can occlusion test thousands of objects with one single rendered quadrilateral. The shader simply reads the test parameters from the texture or the stream, picks the occlusion map layer in which the object covers at most 16 pixels in screen space, and tests whether at least one of them is visible. 16 pixels provide a good sampling resolution while still being fast. With decreasing sampling density the number of objects classified as visible although they are invisible increases. This is a negligible problem, though, compared to the massive processing throughput. The result is written as a single-component color.

Now the result is read back to the CPU. In contrast to HOM and similar techniques we read back the actual results, not the occlusion image. The advantage is that far less data has to be read back. For each result we read back only a single color value, hence an 8-bit value. This is very fast and produces next to no stalling compared to occlusion queries. With this technique 10,000 objects can be tested in 3-4ms (on an ATI Radeon 4870 HD; nVidia is faster with this technique but slower in other parts). In the same time you can test maybe a handful of objects with occlusion queries. Here, though, you can test entire cities if this is what you need.

This technique is so wonderful because it needs neither fancy graphics card abilities or extensions nor complex and error-prone calculations. All you need is to fill a texture, use some nifty shaders and read the texture back to the CPU.

Further optimizations are possible, such as using geometry shaders to cull objects while rendering. This would not require a read-back. Even now, though, this technique can be used to calculate visibility for thousands of objects in real time on every frame update.